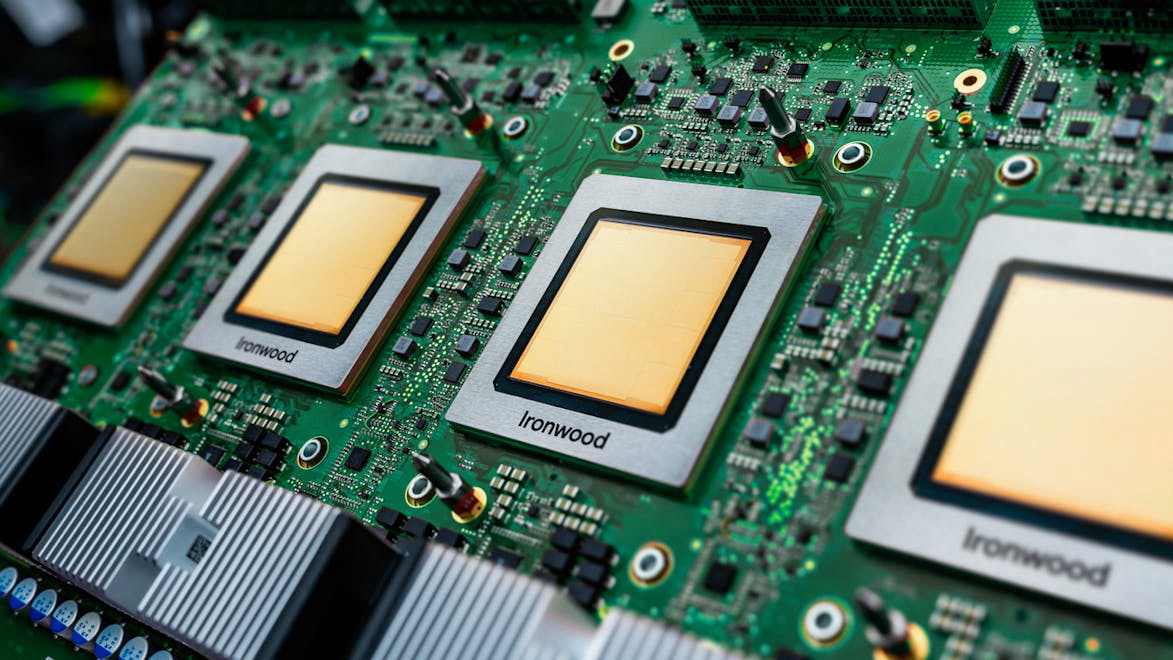

Ironwood

The first Google TPU for the age of inference

Listed in categories:

Hardware

Description

Ironwood is Google Cloud's seventh-generation Tensor Processing Unit (TPU), designed specifically for inference. Google describes it as its most powerful and energy-efficient TPU to date, built to serve advanced AI models at scale. It targets a shift from responsive AI models to models that proactively generate insights and interpretations. Ironwood scales to configurations of up to 9,216 chips, with the stated goal of better performance, cost, and power efficiency for demanding AI workloads.

How to use Ironwood?

To use Ironwood, developers can use Google's Pathways software stack to harness the combined computing power of multiple Ironwood TPUs, so that AI applications can scale beyond a single chip.
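As a rough illustration of what spreading work across multiple chips looks like in code, here is a minimal JAX sketch. It does not use any Pathways-specific API; it assumes only that the runtime exposes the available accelerators through `jax.devices()`, and it also runs on a single CPU device. The function names (`make_data_mesh`, `forward`, `run_sharded_forward`) and the toy layer sizes are hypothetical and chosen for illustration.

```python
import jax
import jax.numpy as jnp
import numpy as np
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P


def make_data_mesh():
    # Arrange every visible device (TPU chips, or a single CPU) on one "data" axis.
    devices = np.array(jax.devices())
    return Mesh(devices, axis_names=("data",))


@jax.jit
def forward(x, w):
    # Toy single-layer forward pass; stands in for a real model.
    return jnp.tanh(x @ w)


def run_sharded_forward(batch_per_device=4, features=8, hidden=16):
    mesh = make_data_mesh()
    n_devices = mesh.devices.size

    # The global batch is sized so it divides evenly across devices.
    x = jnp.ones((batch_per_device * n_devices, features))
    w = jnp.full((features, hidden), 0.01)

    # Split the batch dimension across devices; replicate the weights.
    x = jax.device_put(x, NamedSharding(mesh, P("data", None)))
    w = jax.device_put(w, NamedSharding(mesh, P()))

    # jit compiles one program that runs over all shards.
    return forward(x, w)


if __name__ == "__main__":
    out = run_sharded_forward()
    print(out.shape, out.sharding)
```

The same script runs unchanged whether one device or many are visible, which is the property the paragraph above attributes to the software stack: the program is written once and the runtime decides where each shard executes.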

Core features of Ironwood:

1. High performance and energy efficiency for AI workloads
2. Support for large language models and advanced reasoning tasks
3. Enhanced memory capacity and bandwidth for processing larger datasets
4. Advanced Inter-Chip Interconnect (ICI) for efficient communication
5. Optimized for both training and inference workloads

What can Ironwood be used for?

| # | Use case | Status |
|---|---|---|
| 1 | Training large language models (LLMs) | ✅ |
| 2 | Running advanced reasoning tasks in AI applications | ✅ |
| 3 | Processing large datasets for financial and scientific domains | ✅ |

Who developed Ironwood?

Ironwood was developed by Google and is offered through Google Cloud, a provider of cloud computing services known for its AI capabilities and infrastructure. Google Cloud draws on more than a decade of experience in delivering AI compute, and its infrastructure supports services used by billions of people globally.