DeepGEMM

Unlock Maximum FP8 Performance on Hopper GPUs

Description

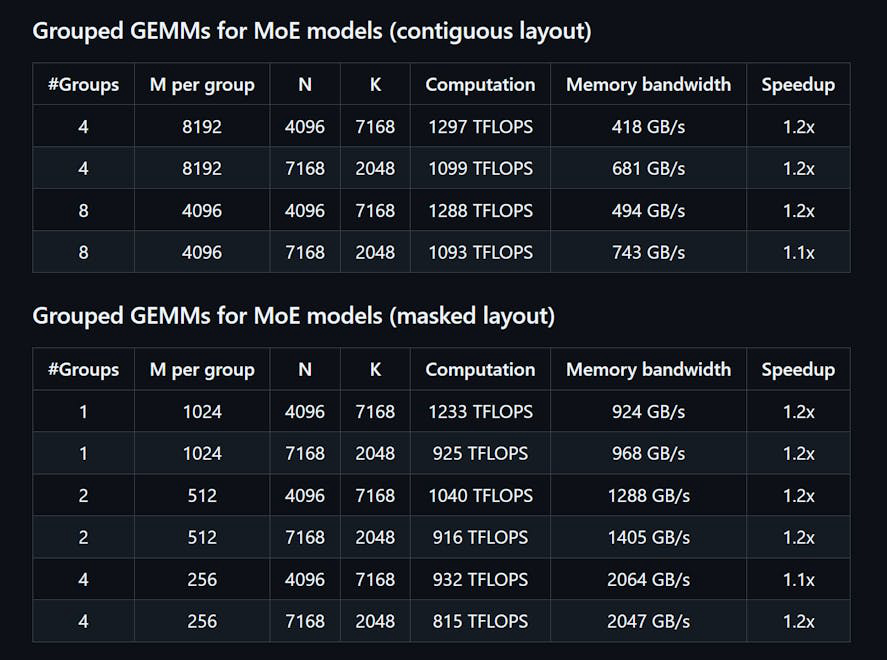

DeepGEMM is a library for clean and efficient FP8 General Matrix Multiplications (GEMMs) with fine-grained scaling, as proposed in DeepSeek-V3. It supports both normal GEMMs and Mixture-of-Experts (MoE) grouped GEMMs. The library is written in CUDA and compiles all kernels at runtime with a lightweight Just-In-Time (JIT) module, so nothing has to be compiled during installation. DeepGEMM supports only NVIDIA Hopper tensor cores. Because FP8 tensor core accumulation is imprecise, it promotes partial results to CUDA cores in a two-level accumulation scheme. Despite its lightweight design, DeepGEMM's performance matches or exceeds expert-tuned libraries across various matrix shapes.
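Why fine-grained scaling helps can be illustrated with a small pure-Python sketch. This is not DeepGEMM's code: `round_to_e4m3`, `quantize`, `dequantize`, and `max_relative_error` are hypothetical helpers, the FP8 E4M3 model is simplified (no NaN handling), and the block size of 4 is chosen only to keep the example short. It compares one scale for the whole vector against one scale per block when a few large outliers are present.

```python
import math

E4M3_MAX = 448.0               # largest finite FP8 E4M3 value
E4M3_MIN_NORMAL = 2.0 ** -6    # smallest normal E4M3 value
E4M3_SUBNORMAL_STEP = 2.0 ** -9


def round_to_e4m3(x):
    """Round x to a nearby FP8 E4M3 value (simplified model)."""
    x = max(-E4M3_MAX, min(E4M3_MAX, x))
    if abs(x) < E4M3_MIN_NORMAL:
        # Subnormal range: values sit on a fixed grid of step 2**-9.
        return round(x / E4M3_SUBNORMAL_STEP) * E4M3_SUBNORMAL_STEP
    mantissa, exponent = math.frexp(x)   # x = mantissa * 2**exponent
    # 1 implicit + 3 stored mantissa bits = 4 significant bits.
    return math.ldexp(round(mantissa * 16) / 16, exponent)


def quantize(values, block_size):
    """Scale each block so its largest magnitude maps to E4M3_MAX, then round."""
    quantized, scales = [], []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        scale = amax / E4M3_MAX if amax > 0 else 1.0
        scales.append(scale)
        quantized.extend(round_to_e4m3(v / scale) for v in block)
    return quantized, scales


def dequantize(quantized, scales, block_size):
    """Multiply each quantized value by the scale of the block it belongs to."""
    return [q * scales[i // block_size] for i, q in enumerate(quantized)]


def max_relative_error(values, block_size):
    """Worst relative error after a quantize/dequantize round trip."""
    quantized, scales = quantize(values, block_size)
    restored = dequantize(quantized, scales, block_size)
    return max(abs(r - v) / abs(v) for r, v in zip(restored, values))


# Small values next to large outliers (illustrative data only).
values = [0.001, 0.002, -0.0015, 0.003, 1000.0, 500.0, -750.0, 250.0]
coarse = max_relative_error(values, block_size=len(values))  # one shared scale
fine = max_relative_error(values, block_size=4)              # one scale per block
print(f"one scale: {coarse:.3f}, per-block scales: {fine:.3f}")
```

With a single scale, the outliers set the scale and the small values underflow. With per-block scales, each block uses the full FP8 range on its own.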

How to use DeepGEMM?

To use DeepGEMM, install the library from a clone of its repository with 'python setup.py install'. Then import 'deep_gemm' in your Python project and call the GEMM function that matches your operation (normal or MoE grouped). Make sure your environment meets the library's CUDA and PyTorch version requirements and that the code runs on a Hopper GPU.
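Before calling any kernels, it can help to confirm that the environment is usable. The sketch below is a hypothetical pre-flight check, not part of DeepGEMM. It assumes the package is importable as `deep_gemm` (the module name used in the project's repository) and that PyTorch is importable as `torch`. The helper names `missing_modules`, `is_hopper`, and `check_environment` are made up for illustration.

```python
import importlib.util

# Assumed top-level module names for PyTorch and DeepGEMM.
REQUIRED_MODULES = ("torch", "deep_gemm")


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def is_hopper(capability):
    """True for compute capability 9.x, the Hopper generation."""
    major, _minor = capability
    return major == 9


def check_environment():
    """Collect human-readable problems; an empty list means no problem was found."""
    problems = [f"module not found: {name}" for name in missing_modules()]
    if importlib.util.find_spec("torch") is not None:
        import torch  # imported lazily so the check also runs without PyTorch

        if not torch.cuda.is_available():
            problems.append("no CUDA device visible to PyTorch")
        elif not is_hopper(torch.cuda.get_device_capability()):
            problems.append("GPU is not Hopper (compute capability 9.x)")
    return problems


print(check_environment() or "environment looks usable")
```

The check does not verify CUDA or PyTorch version numbers. Consult the project's own requirements for the exact minimum versions.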

Core features of DeepGEMM:

1. Supports normal and Mixture-of-Experts (MoE) grouped GEMMs
2. Written in CUDA with runtime kernel compilation
3. Optimized for NVIDIA Hopper tensor cores
4. Uses two-level accumulation (promotion) for FP8
5. Lightweight design with a single core kernel function
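The idea behind two-level accumulation can be sketched in plain Python. This is an illustration under stated assumptions, not the library's kernel: `round_to_bits`, `dot_single_level`, and `dot_two_level` are hypothetical helpers, a Python float stands in for the high-precision CUDA-core accumulator, and the 8-bit accumulator width and chunk size of 128 are chosen only to make the effect easy to see.

```python
import math


def round_to_bits(x, bits):
    """Keep only `bits` significant binary digits of x (round to nearest even)."""
    if x == 0.0:
        return 0.0
    mantissa, exponent = math.frexp(x)   # x = mantissa * 2**exponent
    scale = 2 ** bits
    return math.ldexp(round(mantissa * scale) / scale, exponent)


def dot_single_level(a, b, acc_bits):
    """Accumulate every product in one low-precision accumulator."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = round_to_bits(acc + x * y, acc_bits)
    return acc


def dot_two_level(a, b, acc_bits, chunk):
    """Accumulate `chunk` products in low precision, then promote the partial
    sum into a high-precision accumulator and start a fresh partial sum."""
    total = 0.0     # high-precision accumulator (stand-in for FP32)
    partial = 0.0   # low-precision accumulator (stand-in for the tensor core)
    for i, (x, y) in enumerate(zip(a, b), start=1):
        partial = round_to_bits(partial + x * y, acc_bits)
        if i % chunk == 0:
            total += partial
            partial = 0.0
    return total + partial


# 1024 products of exactly 1.0, so the true dot product is 1024.
a = [0.5] * 1024
b = [2.0] * 1024
print(dot_single_level(a, b, acc_bits=8))
print(dot_two_level(a, b, acc_bits=8, chunk=128))
```

In this toy setting the single low-precision accumulator stalls at 256, because adding 1 to 256 is no longer representable in 8 significant bits. Promoting every 128 products keeps each partial sum small enough to stay exact, and the full result of 1024 is recovered.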

What can DeepGEMM be used for?

| # | Use case | Status |
|---|---|---|
| 1 | Efficient matrix multiplication for deep learning models | ✅ |
| 2 | Optimizing performance in inference tasks | ✅ |
| 3 | Utilizing FP8 precision for memory-efficient computations | ✅ |

Who developed DeepGEMM?

DeepGEMM is developed by a team including Chenggang Zhao, Liang Zhao, Jiashi Li, and Zhean Xu, who are focused on providing efficient solutions for matrix multiplication in deep learning applications.